Nvidia shows what it can do with neural rendering power

We go back to school to understand how the Blackwell architecture uses AI to improve games.

Many gamers want video games to look ever more realistic. Since the leap to 3D, the aim has been to better represent everything on screen, from characters and textures to space and physics. In recent years, technological advances have made it possible to create video games that are much closer to reality.

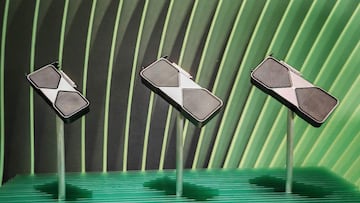

Last week, during CES 2025, Nvidia announced a new architecture that will debut with the RTX 50 series of graphics cards. During a lengthy closed-door session, the company explained how one of the key elements of this architecture, artificial intelligence, will improve what we see on screen thanks to neural rendering.

The Age of Programmable Neural Shaders Begins

One of the first points made was that, until now, game developers had no direct way to take advantage of a GPU’s Tensor Cores from their own shaders. This is about to change. First, some background: Tensor Cores enable mixed-precision computing, dynamically adjusting the precision of calculations to accelerate performance while maintaining accuracy. They are part of Nvidia’s graphics cards and are responsible for processing data grouped into tensors. Tensors are mathematical objects that describe the relationship between other mathematical objects; in practice, they are handled as multi-dimensional arrays of numbers.
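To give a feel for what mixed precision means, here is a minimal Python sketch. It is not how Tensor Cores are implemented; it only imitates the general idea of feeding low-precision (16-bit) inputs into a wider accumulator. The helper names `to_half`, `dot_half_only` and `dot_mixed` are made up for this illustration.

```python
import struct

def to_half(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (16-bit) value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def dot_half_only(a, b):
    """Dot product where the inputs AND the running sum stay in half precision."""
    total = 0.0
    for x, y in zip(a, b):
        product = to_half(to_half(x) * to_half(y))
        total = to_half(total + product)
    return total

def dot_mixed(a, b):
    """Dot product with half-precision inputs but a wider accumulator."""
    total = 0.0
    for x, y in zip(a, b):
        total += to_half(x) * to_half(y)
    return total

# Illustrative data: 4096 copies of 0.1 multiplied by 1.0.
a = [0.1] * 4096
b = [1.0] * 4096
exact = 4096 * 0.1

half_only = dot_half_only(a, b)
mixed = dot_mixed(a, b)

print(f"reference:       {exact}")
print(f"half everywhere: {half_only} (error {abs(half_only - exact):.3f})")
print(f"mixed precision: {mixed} (error {abs(mixed - exact):.3f})")
```

The small inputs save memory and bandwidth, while the wider running sum keeps the result close to the reference. Keeping everything in 16 bits lets rounding errors pile up.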

Nvidia has worked with Microsoft to add Cooperative Vectors support to DirectX, unlocking the Tensor Cores and allowing developers to use neural shaders. What does all this mean? From now on, programmers will be able to run small AI models inside their shaders, working more efficiently with neural rendering and exploring techniques that were not practical before.
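As a rough mental model, a neural shader can be pictured as a very small neural network evaluated for each pixel, built mostly from matrix-vector multiplications. The sketch below is plain Python, not shader code, and does not use any DirectX API. The function `tiny_neural_shader` and all of its weights are invented for illustration.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

def relu(values):
    """Clamp negative values to zero, a common neural network activation."""
    return [max(0.0, v) for v in values]

def tiny_neural_shader(inputs, w1, b1, w2, b2):
    """Evaluate a two-layer network: inputs -> hidden layer -> RGB output."""
    hidden = relu([h + b for h, b in zip(matvec(w1, inputs), b1)])
    return [o + b for o, b in zip(matvec(w2, hidden), b2)]

# Hypothetical weights; a real network would be trained, not hand-written.
W1 = [[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]
B1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
B2 = [0.0, 0.0, 0.0]

# Inputs could be texture coordinates (u, v) for one pixel.
rgb = tiny_neural_shader([0.25, 0.75], W1, B1, W2, B2)
print(rgb)  # [0.25, 0.75, 0.0]
```

Running thousands of these small multiplications per frame is the kind of workload that Tensor Cores are designed to accelerate.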

RTX Neural Materials

The new architecture allows the graphics card to take the shader code and textures associated with a material and generate a more accurate representation using a neural network built into the game engine. In one example, a statuette was shown with materials such as a gem, metals and silk. The difference between the standard material and the neural material is striking: the neural material looks more detailed and also requires about a third of the processing.

Another example showed how skin will improve. Until now, it has been relatively easy to render an opaque material such as wood. But translucent materials, such as ear cartilage, are harder to render because of the way light passes into them and scatters. With neural shaders, Nvidia can present more realistic skin, which it has dubbed RTX Skin. In the demo, the Half-Life 2 headcrab has certain parts that let light through more realistically, a subtle but noticeable change.

RTX Neural Faces

The feeling of strangeness when seeing a human face that doesn’t seem quite real, known as the “Uncanny Valley,” is about to disappear. RTX Neural Faces, along with generative AI models, will be able to render increasingly realistic faces, adjusting to light and emotions, making video game characters more photorealistic.

More realistic and easier hair

If you’ve noticed that character hair has become an issue in recent generations, you’re not alone. Generating hair in video games is both challenging, because of the number of polygons an accurate representation requires, and expensive. Each hair is represented by 3D triangular tubes, with approximately 6 triangles per segment, which adds up to some 6 million triangles to store and style. Under the Blackwell architecture, hair is instead built from linear segments swept with spheres, which reduces the amount of data, lowers VRAM usage, and improves the frame rate.
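The figures above can be turned into a quick back-of-the-envelope calculation. This sketch uses only the two numbers quoted in the presentation (6 triangles per segment, 6 million triangles in total) and assumes, purely for illustration, that the sphere-based approach needs one primitive per hair segment.

```python
TRIANGLES_PER_SEGMENT = 6      # figure quoted in the presentation
TOTAL_TRIANGLES = 6_000_000    # figure quoted in the presentation

def segments_from_triangles(total_triangles: int, triangles_per_segment: int) -> int:
    """Number of hair segments implied by a triangle budget."""
    return total_triangles // triangles_per_segment

segments = segments_from_triangles(TOTAL_TRIANGLES, TRIANGLES_PER_SEGMENT)

# Assumption (not from the article): one sphere-swept primitive per segment.
sphere_primitives = segments
reduction = TOTAL_TRIANGLES / sphere_primitives

print(f"hair segments:        {segments:,}")           # 1,000,000
print(f"triangle primitives:  {TOTAL_TRIANGLES:,}")    # 6,000,000
print(f"sphere primitives:    {sphere_primitives:,}")  # 1,000,000
print(f"primitive reduction:  {reduction:.0f}x")       # 6x
```

Under that assumption, the same hairstyle needs about six times fewer primitives, which is in line with the lower VRAM usage Nvidia described.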

The rise of the polygon in video games

During the presentation, Nvidia highlighted the evolution of model geometry. In 30 years, it has gone from the 10,000 polygons of a title like Virtua Fighter in the mid-90s, to the 50 million of Cyberpunk 2077, to the nearly 500 million triangles of Zorah, Nvidia’s demo to showcase its progress. As a result, ray tracing has become increasingly complex because of all the things it has to render. RTX Mega Geometry solves this problem by generating the meshes used for ray tracing without any loss of FPS. All these triangles are updated in real time, allowing for a fluid way of rendering light.

The evolution of DLSS 4

In gaming, there are three fundamental pillars of real-time graphics: image quality, responsiveness, and fluidity. In other words, resolution, latency, and frame rate. This has been an important area of research for Nvidia, which has made great strides in balancing these aspects thanks to AI.

If you’ve been using DLSS since its introduction in 2019, you’ve probably seen this progress: over 80% of gamers with an RTX card use it, across more than 540 games. However, this hasn’t been without its problems. Over the past six years, Nvidia has analyzed the flaws that appear when using this technology. Among the most common are flickering and ghosting, which are not always obvious to the naked eye, but are still noticeable. The company has tried to find out why the models make these errors and has continued to train them and add data in order to resolve them.

The result is DLSS 4, which uses AI to predict what will appear on screen. This is possible thanks to the transformer models that have been integrated into DLSS. They reproduce fine, distant objects such as fences or power lines more accurately, and even reduce the ghosting of a ceiling fan. DLSS 4 also introduces Multi Frame Generation, which generates additional frames with AI by anticipating what is happening on the screen.

Super Resolution is also part of these improvements. Combined with Multi Frame Generation, it means that 15 out of every 16 pixels are generated by AI, making rendering up to 8 times faster. This helps to drive the three pillars mentioned above. While without DLSS a scene could be rendered at 27 fps with a latency of 71 ms, DLSS 4 can take us to 248 fps with a latency of 34 ms by using Multi Frame Generation and the transformer model.
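The "15 out of every 16 pixels" figure can be reproduced with simple arithmetic. The sketch below assumes, as an illustration rather than something stated in the session, that Super Resolution renders one pixel in four (for example 1080p upscaled to 4K) and that Multi Frame Generation adds three AI frames for each rendered frame. The function name `rendered_pixel_fraction` is made up for this example.

```python
from fractions import Fraction

def rendered_pixel_fraction(upscale_pixel_ratio: int, generated_frames: int) -> Fraction:
    """Share of displayed pixels that the GPU renders traditionally."""
    spatial = Fraction(1, upscale_pixel_ratio)    # pixels rendered per frame
    temporal = Fraction(1, 1 + generated_frames)  # frames rendered per displayed frame
    return spatial * temporal

# Assumed settings: 1 in 4 pixels rendered, 3 generated frames per rendered frame.
rendered = rendered_pixel_fraction(upscale_pixel_ratio=4, generated_frames=3)
ai_generated = 1 - rendered

# Figures quoted in the article.
fps_gain = 248 / 27
latency_cut = 1 - 34 / 71

print(f"rendered traditionally: {rendered}")      # 1/16
print(f"generated by AI:        {ai_generated}")  # 15/16
print(f"frame rate gain:        {fps_gain:.1f}x")
print(f"latency reduction:      {latency_cut:.0%}")
```

With the quoted numbers, the frame rate rises by a factor of about 9 while latency roughly halves.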

A total of 75 games and applications will be supported from Day 0 when DLSS 4 becomes available, with many more to come. For now, these games include Cyberpunk 2077, Dragon Age: The Veilguard, Alan Wake II, Star Wars Outlaws, Diablo IV, Indiana Jones and the Great Circle, and Hogwarts Legacy, among others.

In addition, the Nvidia app will be able to bring the latest DLSS technology to existing games with DLSS support. This will let players benefit from Multi Frame Generation and try out the latest transformer models and DLSS Super Resolution.

Finally, other improvements will arrive over time. For example, Nvidia Reflex, a technology that reduces latency in games, will be updated to Reflex 2, whose Frame Warp feature will offer a 75% faster response. Reflex 2 will appear in games such as The Finals and Valorant.

Doom: The Dark Ages - A Sneak Peek

To showcase the work that has been done with these new Nvidia technologies, part of the Doom: The Dark Ages team from id Software took the stage. Over the past decade, the partnership between the two companies has accelerated, allowing the latest installments of Doom to take advantage of these technologies.

The demo showed a video rendered with path tracing, walking through the different environments that the game will offer. The technology is based on the patch that was integrated into Doom Eternal. It is used not only for the visual side of the game, but also for gameplay elements such as surfaces and materials, creating different effects depending on what is being shot and differentiating between materials such as metal or leather.
